With the READI Chicago program, Heartland Alliance connects individuals who are most at risk of gun violence with rapid employment in paid transitional jobs, cognitive behavioral therapy, and support services in order to decrease violence and create viable opportunities for a better future. It's a one-of-a-kind program that brings together several community organizations, each with its own systems in place.

Our challenge was to help them identify better ways to collect data and share relevant information by creating a daily feedback tool.

Client: Heartland Alliance | Company: MU/DAI | Role: Design Research Lead & Design Oversight

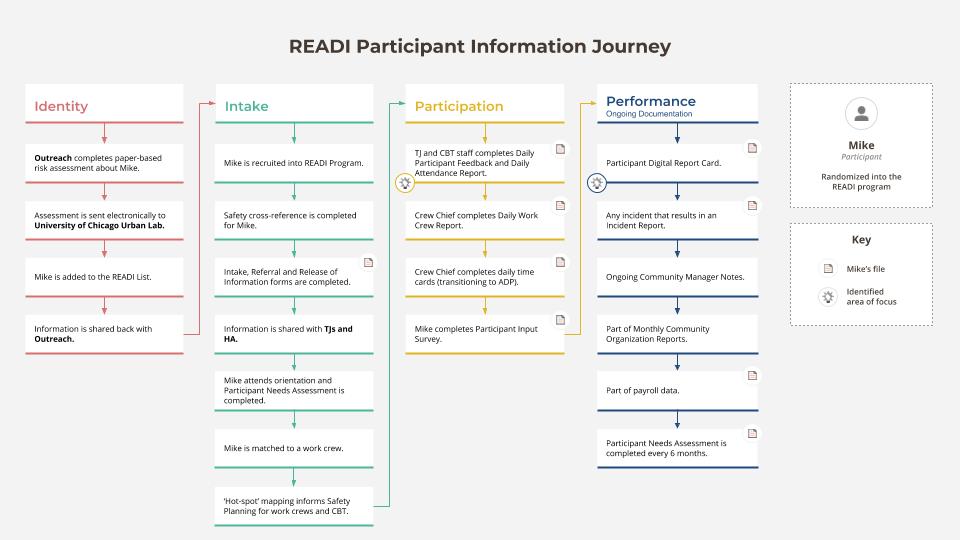

We spent time with the stakeholders reviewing the overall program goals and structure, as well as the types of data collected and the methods used to collect it. Then we mapped the journey of participant information and used this map to brainstorm potential improvements to processes and tools. We highlighted the areas with the most potential impact and sketched preliminary ideas.

We went into the field right away since the future users of our solution weren’t located in the main Heartland Alliance office. We went to all three of the READI program sites in Chicago.

Because this program brings together different non-profit organizations, each site was distinct in its needs, approach, and setup. Even the forms they used to collect information on participants varied greatly.

Based on what we learned in the field, we realized that while this tool was meant to collect data, an even more important purpose was to support conversations. These conversations had to happen with participants, among staff, and even across organizations. We created scenarios to document some of the key moments and data points that would support these conversations.

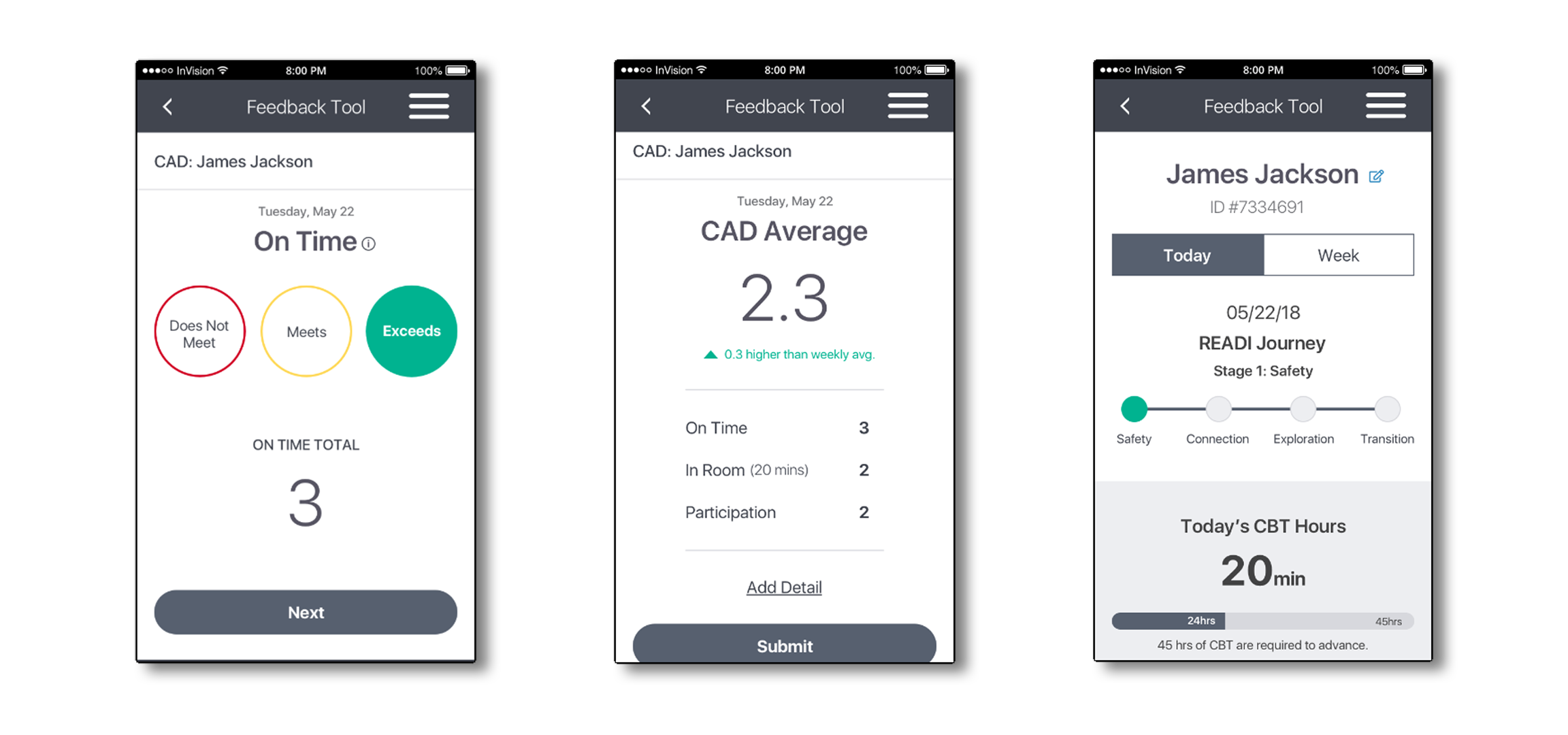

As we moved into design, we used our scenarios to guide the process. We also referenced the key themes from our research to inform our decisions.

Our key themes: increasing transparency, supporting accountability, providing a sense of progress, enabling team communication, scalability, and bridging the digital divide.

We had to keep in mind that many of the screens would be viewed by two people at once: a participant and the staff member giving or reviewing feedback with them. However, in other parts of the tool, we needed to protect the privacy of participant records so that one individual's scores couldn't accidentally be seen by other participants.

We went back to the program sites to show staff members our prototype. This gave us a chance to see how they interpreted different parts of the design.

We had previously learned that one of the sites was using color coding for the feedback categories, and staff there were excited to see this color coding reflected in the prototype, since it made it easy to see at a glance how a participant had performed.

We also discussed other needs the tool could meet and explored additional feedback details the staff might want. We added these to the backlog and created a few future-state screens to capture them.

This was a fast-moving project, with only four weeks of development time scheduled for phase one. Over the course of the project, however, we had uncovered a wide range of needs that this tool could support.

In order to determine what really needed to be in our MLP, we held a prioritization workshop with our stakeholders.

We walked the stakeholders through our wireframes and the user stories related to each set of screens. Then we had them vote on whether each user story was a must, should, could, or won't have for the initial build of the tool.